One way to overcome the processing limitations of the visual system is to attend selectively to relevant information only. Another strategy is to process sets of objects as ensembles and to represent their average characteristics (e.g., mean size, brightness, orientation) instead of the individual group members. Recent evidence suggests that ensemble representation may occur even for human faces (for a summary, see Alvarez, 2011): observers can extract the mean emotion, sex, and identity from a set of faces (Haberman & Whitney, 2007; de Fockert & Wolfenstein, 2009). Here, we extend this line of research to face race: Can we extract the "mean race" of a set of faces when no conscious perception of single individuals is possible? Moreover, does the visual system process own- and other-race faces differently at this stage? We address these questions with behavioral experiments using psychophysical methods. [ Jung W, Bülthoff I, Armann RGM and Bülthoff HH ]

In the human somatosensory network, somatic sensory information is relayed from the thalamus to primary somatosensory cortex (S1) and then distributed to adjacent cortical regions for further cognitive processing. In this project, we show that the locations of passive vibrotactile stimulation on the fingers can be discriminated from human functional magnetic resonance imaging (fMRI) data acquired at 3 T. Our findings are in line with prior studies reporting activity in contralateral posterior parietal cortex (PPC) and in contralateral primary and secondary somatosensory cortex (S1, S2). We examine two cases: (a) discrimination between the locations of finger stimulation, and (b) discrimination of finger stimulation vs. no stimulation, in both cases using standard general linear model (GLM) analysis and searchlight multi-voxel pattern analysis (MVPA). In case (b), we find significant cortical activation in S1 (along with reliable somatotopic maps) but not in PPC or S2. In case (a), by contrast, we observe activity in contralateral PPC and S2 but not in S1. Our results support the general understanding that S1 is the main receptive area for the sense of touch, whereas adjacent cortical regions, in particular PPC and S2, carry out higher-level processing and may thus contribute most to successfully decoding which finger location was stimulated. [ Kim J, Müller KR, Kim S and Bülthoff HH ]
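The searchlight MVPA mentioned above can be illustrated with a minimal sketch in Python/NumPy. It is not the authors' analysis pipeline, and several choices are assumptions made for brevity. The input is a toy array of single-trial responses with shape (trials, x, y, z). Each searchlight is a cube of side `2 * radius + 1` voxels rather than the sphere more commonly used. The classifier is a leave-one-out nearest-class-mean rule on Euclidean distance, a simple stand-in for the linear classifiers (e.g., SVMs) typical of real studies. The function names `searchlight_accuracy` and `loo_nearest_mean_accuracy` are hypothetical.

```python
import numpy as np


def loo_nearest_mean_accuracy(patterns, labels):
    """Leave-one-out accuracy of a nearest-class-mean classifier.

    patterns: (n_trials, n_features); labels: (n_trials,).
    Each held-out trial is assigned to the class whose mean pattern,
    computed from the remaining trials only, is closest in Euclidean
    distance. Every class needs at least two trials.
    """
    patterns = np.asarray(patterns, dtype=float)
    labels = np.asarray(labels)
    classes = np.unique(labels)
    n = len(labels)
    correct = 0
    for i in range(n):
        train = np.arange(n) != i  # exclude the held-out trial
        dists = [
            np.linalg.norm(patterns[i] - patterns[train & (labels == c)].mean(axis=0))
            for c in classes
        ]
        correct += int(classes[int(np.argmin(dists))] == labels[i])
    return correct / n


def searchlight_accuracy(data, labels, radius=1):
    """Map of decoding accuracies, one value per voxel.

    data: (n_trials, nx, ny, nz) array, e.g. single-trial response estimates.
    Around each voxel, a cube of side 2*radius + 1 (clipped at the volume
    border) provides the features for the classifier above.
    """
    _, nx, ny, nz = data.shape
    acc = np.zeros((nx, ny, nz))
    for x in range(nx):
        for y in range(ny):
            for z in range(nz):
                cube = data[:,
                            max(x - radius, 0):x + radius + 1,
                            max(y - radius, 0):y + radius + 1,
                            max(z - radius, 0):z + radius + 1]
                acc[x, y, z] = loo_nearest_mean_accuracy(
                    cube.reshape(cube.shape[0], -1), labels)
    return acc


# Toy example (hypothetical data): two stimulated fingers, 10 trials each,
# in a 6x6x6 volume.
rng = np.random.default_rng(0)
labels = np.repeat([0, 1], 10)
data = rng.normal(size=(20, 6, 6, 6))       # noise everywhere ...
pattern = 3 * rng.normal(size=(2, 2, 2))    # ... plus a finger-specific pattern
data[labels == 1, :2, :2, :2] += pattern    # in one corner, for finger 1 only
acc_map = searchlight_accuracy(data, labels)
print(acc_map.shape, acc_map[0, 0, 0], acc_map[5, 5, 5])
```

Voxels whose cube overlaps the corner carrying the finger-specific pattern should reach high accuracy, while pure-noise voxels should hover around chance (0.5). In real data, such maps would additionally be tested for significance across participants, a step this sketch omits.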

We can quickly and easily determine the ethnicity (race) of a face. What is the basis for this decision? We paired Asian and Caucasian faces matched for sex and age. Within each pair, one of four facial areas was exchanged between the two faces: the nose, the mouth, the eyes (inner areas), or the area surrounding those features (outer part of the face). We also exchanged the whole shape or the whole texture (skin, color information) of the faces. Korean and German participants classified all faces as Asian or Caucasian. Changing the ethnicity of the mouth, the nose, the outer part, or the shape did not affect the ethnicity classification of the whole face, whereas altering the ethnicity of the eyes or of the texture changed the perceived ethnicity. These results were similar for both groups of participants, even though East Asians and Westerners exhibit different viewing patterns when scrutinizing faces. [ Bülthoff I, Armann RGM, Jung W and Bülthoff HH ]

The visual system can accurately represent only a few objects at once. One way to overcome this limitation is selective attention: only the most relevant incoming information is processed. Another strategy is to represent sets of objects as a group or ensemble, encoding some average characteristic of the whole set. Recent evidence suggests not only that the visual system can rapidly extract summary statistics from complex scenes, but also that ensemble coding extends even to human faces: observers can extract the mean emotion, sex, and even a mean identity from a given set of faces. Recent results have also shown that the visual system implicitly and unintentionally captures a great deal of information from the stimulus set, in that it discounts emotional outliers in a set of expressions. Here, we extend this question to the representation of face race: Does the visual system distinguish between own- and other-race faces at this early processing stage? If so, are other-race faces taken into account when a summary representation is extracted? Or are they (or, rather, the outnumbered race in a given set of faces) discounted as outliers? [ Armann RGM, Thornton I, Bülthoff I and Bülthoff HH ]

East Asians and Westerners exhibit cultural differences in perception. In this study, we investigate the role of culture in how local and global dimensions of visual similarity are weighted. East Asian and Western participants view sets of three objects. In each set, the first object shares its local features with the second object and its global features with the third object. The participants' task is to choose which of the second and third objects is more similar to the first. [ Bülthoff I, Armann RGM, Laurent E, Lavaux M, Jung W and Bülthoff HH ]

Here, we investigate how the representation and encoding of higher-cognitive (and presumably culturally defined) characteristics of faces (e.g., trustworthiness, as opposed to more hard-coded biological characteristics such as sex) differ between own-race faces and faces of an unfamiliar race. We also ask how these representations differ between observers of different ethnic and cultural backgrounds. To study this, we use high-level face adaptation methods and functional MRI. [ Armann RGM, Bülthoff I, Wallraven C and Bülthoff HH ]

Basic emotional states can be conveyed similarly by the face, by body movement, and by the voice. It has been assumed that this information is used and integrated into a coherent percept of emotional expression. However, most previous research has dealt with only one stimulus modality at a time. Although this yields information about the neural pathways specific to processing emotional cues from each single modality, two questions remain: How is information from different modalities integrated (multimodally), and are emotions also coded at a more abstract level beyond modality-specific stimulus characteristics (supramodally)? To address these questions, non-emotional symbols associated with facial expressions, body movements, or vocal intonations will be presented to participants while their brain activity is measured with functional magnetic resonance imaging (fMRI). [ Kim J, Bülthoff I, Armann RGM and Bülthoff HH ]

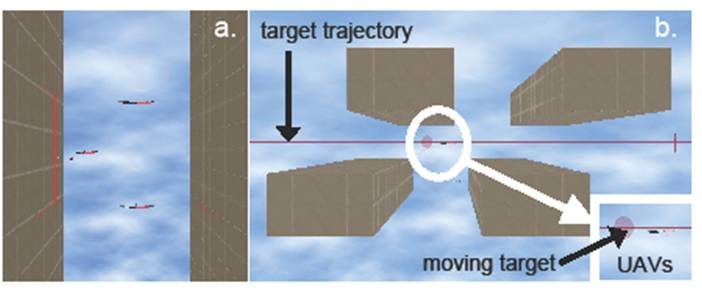

Multiple mobile robots, such as unmanned aerial vehicles (UAVs), can be deployed in teleoperation scenarios such as surveillance. A team of robots is preferred to a single robot for several reasons, including its robustness to system failures. This work presents a control architecture for multiple UAVs (mUAVs) that focuses on human teleoperation performance. Specifically, the system is designed to enhance the teleoperator's perceptual awareness of the remote UAV environment by providing appropriately designed haptic cues that do not increase control effort. Our psychophysical evaluations demonstrate that haptic cues increase the teleoperator's sensitivity to the remote environment, especially cues based on the UAVs' velocity information. However, with such cues, maneuvering the UAVs accurately requires more control effort. A reasonable trade-off between improved perceptual awareness and control effort can instead be achieved with haptic cues based on the presence of nearby obstacles. [ Son HI, Chuang LL, Kim J and Bülthoff HH ]

Using a simple experimental design, we tried to clarify whether Asian and Caucasian observers do indeed look at face stimuli in different ways, and whether these differences are task-dependent. Observers in Seoul viewed single unfamiliar faces (Asian/Caucasian, male/female) on a computer screen and rated different characteristics of each face (e.g., attractiveness, likeability, intelligence) while their eye movements were recorded with a remote eye tracker (a Tobii T60XL, run from a Mac via the talk2tobii toolbox). The same experiment was later repeated in Tübingen with Caucasian participants. The results are being analyzed in terms of fixations on areas of interest on the face stimuli, i.e., the eyes, nose, mouth, etc. The data of Asian and Caucasian participants are compared, as are the results within each participant group for Asian vs. Caucasian and male vs. female face stimuli. [ Armann RGM, Bülthoff I, Jung W and Bülthoff HH ]

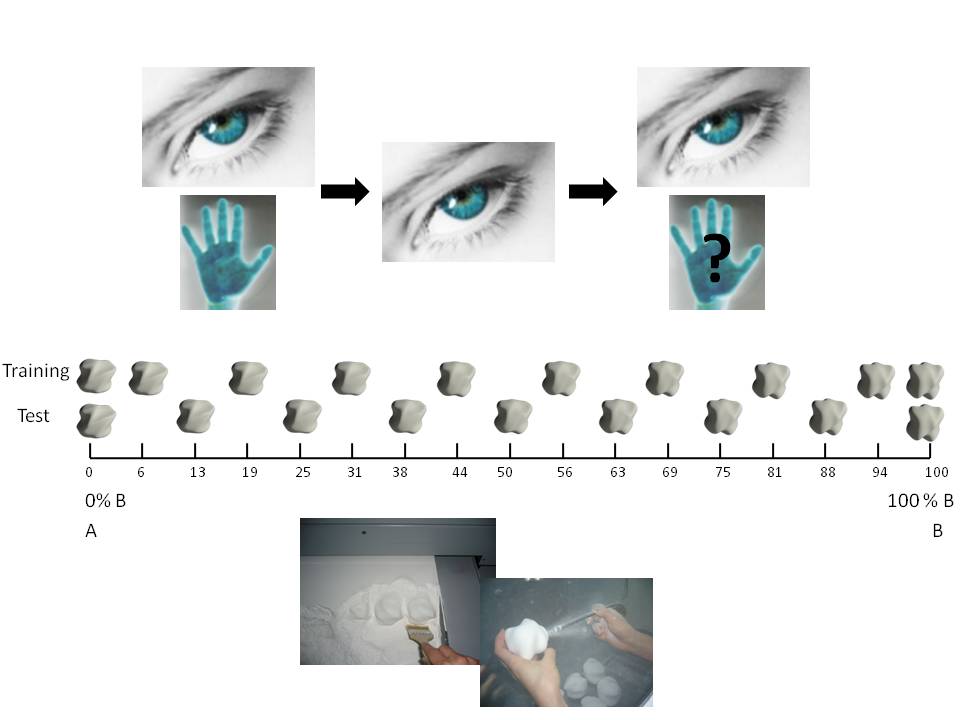

Humans learn about their environment using all their senses; research in psychology, however, has mostly focused on vision, neglecting, for example, the role of touch in the development of perceptual skills such as object recognition. Here, we use rapid prototyping technology such as 3D printing to create well-defined, parametric spaces of objects that people can view and touch. These parameter spaces make it possible to determine precisely which physical properties (such as shape, texture, and material) are important for processing objects perceptually in the visual and haptic modalities, both separately and in combination. The results so far indicate that our sense of touch is much more precise in processing complex shape than previously thought (Cooke et al., 2007; Gaissert et al., 2008). [ Gaissert N, Bülthoff I and Bülthoff HH ]

The general aim of the project was to investigate the perceptual and cognitive mechanisms underlying social interaction using a natural, everyday task. To this end, we designed an immersive virtual environment in which participants played table tennis with a virtual opponent while visual information about the opponent's paddle and body was manipulated systematically. This setup allowed us to determine whether visual information was used to derive physical predictions or predictions about the intentions of the interaction partner. We found that different sources of visual information were used to predict different features (intentions, physics). Investigating the mechanisms underlying social interaction will deepen our knowledge of the influence of social components on our daily lives (e.g., social interaction and learning) and will help to develop systems capable of interacting with humans in a natural and sophisticated way. [ Streuber S and Bülthoff HH ]

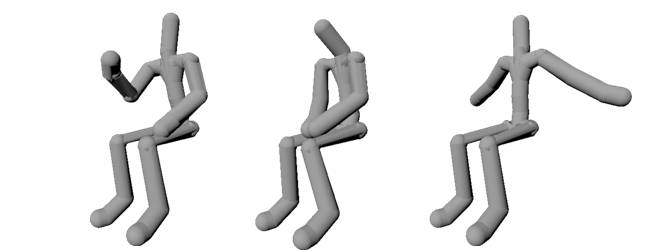

Cultural background is one of the major factors that influence emotion perception. It is well established that some facial expressions are accurately identified across many different cultures. But does the same hold true for emotions expressed through body language? In our experiment, we used motion-capture recordings of a seated actor expressing the following emotional categories: amusement, joy, pride, relief, surprise, anger, disgust, fear, sadness, and shame. The motion was rendered as an animated stick figure (see figure). Participants were asked to identify the emotion expressed by the actor and to characterize it in terms of valence, arousal, and dominance. [ Volkova EP, Mohler BJ and Bülthoff HH ]

In psychology, there is a well-known effect called the "other-race effect": faces of people from an ethnic background different from our own seem to "all look alike", making it difficult, for example, for a Westerner to tell Asian people apart. Physically, however, faces from different ethnicities show similar variation in shape and texture. One possible explanation for the effect is based on the "face space": faces of one's own ethnic background are spread out in this space and are thus easy to tell apart, whereas faces of a different ethnic background are clustered close together, making them harder to tell apart. With increased exposure to faces of another ethnicity, however, the perceptual system learns to extract the features that make these faces easier to distinguish, causing the effect to disappear. In this project, we will use the morphable model built from the Korean and German face databases to create ethnic morphs between faces of the two nationalities and to investigate the "other-race effect" in more detail for both ethnic backgrounds. [ Lee RK, Bülthoff I, Armann RGM and Bülthoff HH ]
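Ethnic morphs of this kind can be produced by linear interpolation in a morphable model. Because all faces are in dense correspondence, each face is a shape vector (vertex coordinates) plus a texture vector (per-vertex colors), and a morph is a weighted average of two such vectors. The sketch below assumes this linear scheme and uses tiny made-up vectors. The function `ethnic_morph` and the toy "Korean" and "German" faces are hypothetical and not part of the project's actual tools.

```python
import numpy as np


def ethnic_morph(shape_a, tex_a, shape_b, tex_b, alpha):
    """Interpolate linearly between two faces in a morphable model.

    shape_*: flattened 3D vertex coordinates; tex_*: flattened per-vertex
    colors. Both faces must be in dense correspondence (same vertex order).
    alpha = 0 returns face A, alpha = 1 returns face B.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    shape = (1 - alpha) * np.asarray(shape_a, float) + alpha * np.asarray(shape_b, float)
    tex = (1 - alpha) * np.asarray(tex_a, float) + alpha * np.asarray(tex_b, float)
    return shape, tex


# Hypothetical toy faces with 2 vertices each (x, y, z and r, g, b per vertex).
korean_shape, korean_tex = np.zeros(6), np.full(6, 0.2)
german_shape, german_tex = np.ones(6), np.full(6, 0.8)

# A continuum of 5 morph levels from the Korean to the German face.
continuum = [ethnic_morph(korean_shape, korean_tex, german_shape, german_tex, a)
             for a in np.linspace(0, 1, 5)]
```

Restricting `alpha` to [0, 1] keeps every morph between the two parent faces. Values outside this range would extrapolate toward caricatures, which this sketch deliberately rejects.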
